CLOSING THE GAP: Teaching Computers to Reliably Identify Objects in Images Using Large-Scale Unannotated Data

By Joshua Preston

Training computers to detect and reliably identify objects in images is a challenge even with advanced computing power. Humans need to see common objects only a few times to learn what they are, and the lesson sticks. Computer programs, by contrast, require massive annotated datasets in which humans draw boxes around every object and label it. One way around this is to train on unlabeled data: the computer predicts what is in each image (these predictions are called “pseudo-labels”) and then trains on its own predictions. If the computer misclassifies an object, for example labeling a walrus as a dog, that faulty label can be reused in later training, and the system risks becoming unreliable.
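The pseudo-labeling idea can be sketched in a few lines of Python. This is a minimal illustration, not the team’s code: it assumes a detector (not shown) that outputs a box, a class name, and a confidence score for each object, and it uses a confidence threshold, a common filtering heuristic in semi-supervised detection, to decide which predictions become pseudo-labels. The `Detection` class, the `make_pseudo_labels` function, the 0.7 threshold, and all scores are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    """One predicted object: a bounding box, a class name, and a confidence score."""
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
    label: str
    score: float


def make_pseudo_labels(detections: List[Detection], threshold: float = 0.7) -> List[Detection]:
    """Keep only confident predictions to use as training targets (pseudo-labels).

    Low-confidence predictions are dropped because they are the most likely to be
    wrong. The 0.7 threshold is an arbitrary, hypothetical value.
    """
    return [d for d in detections if d.score >= threshold]


# Hypothetical predictions from a detector trained on the labeled images.
predictions = [
    Detection((10, 20, 110, 140), "dog", 0.93),
    Detection((200, 50, 320, 180), "dog", 0.41),  # actually a walrus
    Detection((50, 60, 90, 100), "cat", 0.88),
]

pseudo_labels = make_pseudo_labels(predictions)
for d in pseudo_labels:
    print(d.label, d.score)
```

A threshold like this only removes predictions the detector is unsure about. An unknown object that the detector labels with high confidence still passes through, which is why open datasets call for a separate check on whether an object belongs to a known category at all.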

Zsolt Kira, assistant professor in Interactive Computing, and his team, including Ph.D. student Yen-Cheng Liu and collaborators at Meta, are working to train computer programs to classify objects in images more accurately and to mitigate the risk of mislabeled data in large-scale, real-world unlabeled datasets.

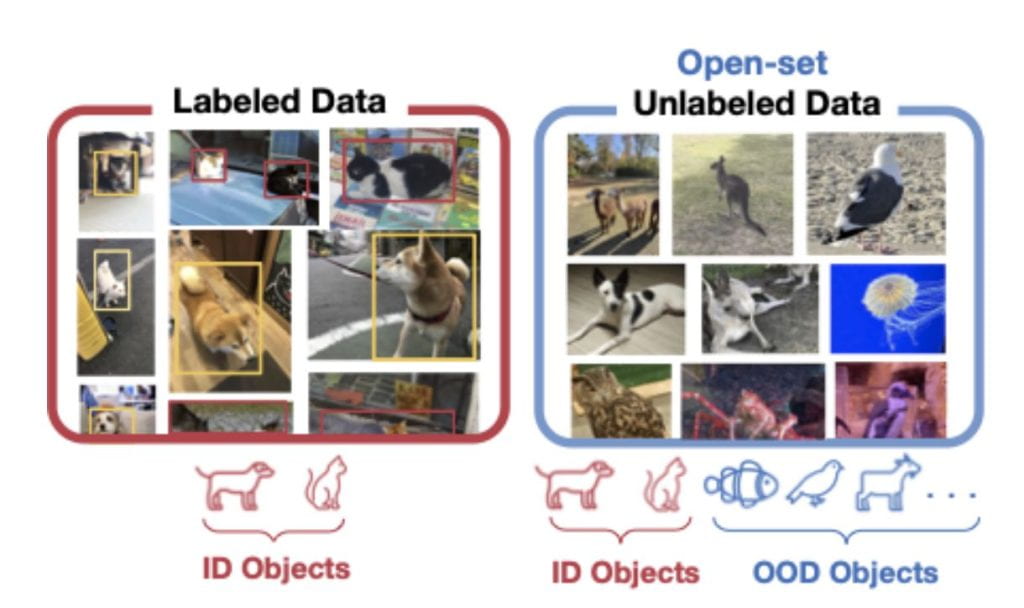

New research from the group is among the first to explore semi-supervised object detection (SSOD), which uses labeled data to train a detector that then assigns pseudo-labels to raw, unlabeled images, on open datasets such as those found on the internet.

Image-based object detectors are usually applied to closed or curated datasets in which unknown objects are absent. The team’s goal is to correctly identify objects in open datasets, from the web and elsewhere, while making sure unknown objects are not mistakenly labeled as one of the known categories.

“Developing methods that can be applied to ‘in-the-wild’ data, which often include many types of objects that are not relevant, is important so that we can scale to millions or billions of unlabeled object examples,” said Kira.

The research team found that part of the solution for open datasets lies in optimizing another tool in the process: an out-of-distribution (OOD) detector, which determines whether an object belongs to a known category, or distribution, of objects. For example, if dogs and cats are the two known categories and a goat slips through and is labeled as one of them, it corrupts the data. The OOD detector needs to be robust enough to filter out such unknown objects.

Through extensive studies, the team found that offline OOD detectors were very effective at removing objects that do not belong to the known distribution (the goat, for example). This was due in part to the offline OOD detector being architecturally independent of the object detector itself.

By contrast, online OOD detectors, which perform object detection and OOD detection at the same time, did not perform as well, and object detection accuracy degraded over time.
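The offline setup can be illustrated with a toy filter. This sketch is an assumption throughout, not the method from the paper: it substitutes a simple, well-known OOD baseline (maximum softmax probability) for the team’s detector, and it assumes a separate classifier has already produced one score per known class for each pseudo-labeled object. The names `KNOWN_CLASSES`, `is_in_distribution`, and `filter_pseudo_labels`, the 0.9 cutoff, and all scores are hypothetical.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical in-distribution categories; each logit vector below follows this order.
KNOWN_CLASSES = ["cat", "dog"]


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw classifier scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def is_in_distribution(ood_logits: Sequence[float], min_confidence: float = 0.9) -> bool:
    """Maximum-softmax-probability test: accept an object as 'known' only if the
    separate OOD classifier is confident it belongs to one of KNOWN_CLASSES."""
    if len(ood_logits) != len(KNOWN_CLASSES):
        raise ValueError("expected one logit per known class")
    return max(softmax(ood_logits)) >= min_confidence


def filter_pseudo_labels(pseudo_labels: List[Dict], min_confidence: float = 0.9) -> List[Dict]:
    """Offline filtering step: drop pseudo-labels that the OOD classifier rejects.

    The OOD classifier is a separate, fixed model (an assumption of this sketch),
    so its judgments are unaffected as the detector retrains on pseudo-labels.
    """
    return [p for p in pseudo_labels if is_in_distribution(p["ood_logits"], min_confidence)]


# Hypothetical pseudo-labels from the detector, each paired with logits that the
# separate OOD classifier produced for the same object crop.
pseudo_labels = [
    {"id": "img1_obj0", "label": "dog", "ood_logits": [0.2, 4.0]},  # confident dog
    {"id": "img1_obj1", "label": "cat", "ood_logits": [1.1, 0.9]},  # a goat: ambiguous
    {"id": "img2_obj0", "label": "cat", "ood_logits": [3.5, 0.5]},  # confident cat
]

kept = filter_pseudo_labels(pseudo_labels)
print([p["id"] for p in kept])
```

Keeping the OOD check in its own model mirrors the independence the team credits for the offline detectors’ performance. An online variant would instead make the OOD judgment inside the detector while it trains, coupling the two tasks, which is the design the team found performed worse.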

“This work has the potential to significantly improve object detectors for some of the popular applications, such as self-driving cars which can collect large amounts of unlabeled data as they drive around,” said Kira. “Other areas such as medical domains can leverage our methods as well, since only skilled doctors can annotate the data.”

The team’s new approach to SSOD on open datasets, which uses an offline OOD detector, achieved state-of-the-art results on both existing and new benchmark settings, according to the team’s findings.

The work also points to new research directions for designing more accurate and efficient OOD detectors. Kira notes that this matters all the more because collecting very large amounts of real-world unannotated data (think cell phone images) is far easier than having humans laboriously annotate it.

The research is published in the proceedings of the European Conference on Computer Vision (ECCV), taking place October 23–27, 2022. The paper, “Open-Set Semi-Supervised Object Detection,” is co-authored by Yen-Cheng Liu, Chih-Yao Ma, Xiaoliang Dai, Junjiao Tian, Peter Vajda, Zijian He, and Zsolt Kira.

This work was sponsored by DARPA’s Learning with Less Labels (LwLL) program under agreement HR0011-18-S-0044. The researchers’ views and statements are based on their findings and do not necessarily reflect those of the funding agencies.